There has been a scarcity of posts on the blog lately, as I’ve been working on a web application for the site. This is a page anyone can use to estimate their knowledge of Chinese words. The start page for the test is here.

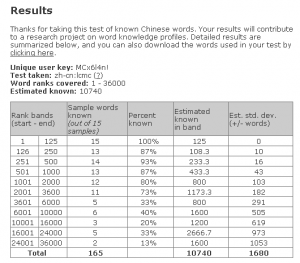

The look and feel of the test is similar to a typical flashcard program, with buttons to show the pinyin and English for a presented word, and buttons for marking the word known or unknown. The main difference is that unknown words are not repeated. For the test, 165 sample words are selected at random from the top 36,000 words in the Lancaster Corpus of Mandarin Chinese (LCMC), and pinyin and English definitions from CC-CEDICT are added. After submitting the results (and answering a few brief questions), you can see the detailed score, which shows how the estimated known word count is calculated. The words are split into 11 segments by frequency, with 15 samples drawn from each, and the known/unknown counts for each segment are extrapolated from its samples.
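As a rough illustration of the extrapolation, here is a minimal Python sketch. It assumes each segment's estimate is simply the segment size multiplied by the fraction of sampled words marked known. The segment sizes below are made up for the example, and `estimate_known` is a hypothetical name, not the function the site actually uses.

```python
from typing import List, Tuple

# (segment_size, words_sampled, words_marked_known)
Segment = Tuple[int, int, int]


def estimate_known(segments: List[Segment]) -> float:
    """Extrapolate a total known-word count from per-segment sample results."""
    total = 0.0
    for size, sampled, known in segments:
        # Scale the known fraction of the sample up to the whole segment.
        total += size * known / sampled
    return total


# Hypothetical results for three segments (sizes are illustrative only).
example = [
    (1000, 15, 15),
    (2000, 15, 12),
    (6000, 15, 3),
]
print(estimate_known(example))  # prints 3800.0
```

In this made-up 6,000-word segment, every sampled word marked known adds 6000/15 = 400 words to the estimate, which shows why the larger, rarer segments swing the total the most.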

For the next few months, I will be collecting results (anonymously, except for a random token to enable repeat testing), which I plan to use in a research project I’ve been working on. My interest is in word knowledge over a wide range of frequencies, and how that varies between individuals. I’m not affiliated with a university, so this whole project is just a hobby. Nevertheless, I hope to end up with some published results.

FAQ

Where are the traditional characters?

The best frequency list in traditional characters that I’ve managed to find is at Taiwan’s Ministry of Education site. According to their licensing application, “本資料檔案僅係授權使用,而非販售賣斷” (File archives are authorized for use only, and not sold outright). That’s a rather brief statement compared to a typical English language EULA, so it’s not clear whether I’d be able to use the results of trials that used their data. I will submit an application to them using their form, and see if it gets approved. In the meantime, the code to support traditional characters is ready, so if I find another decent frequency list, I can use that one instead.

Why are words like “苏联” (Soviet Union) ranked as frequent words?

The LCMC was compiled around the years 1990-1993, and most of its non-fiction texts are from news reports around 1991, so words tied to the news of that period show up often. In compiling my word frequency list from the LCMC data, I excluded texts from categories D (Religion) and H (reports, official documents), to lessen the chance of these anomalies.

The definition it gave me isn’t right. Where can I submit a change?

All definitions are from CC-CEDICT. To submit a request for a change, look up the word in the MDBG dictionary, then click on the “correct this entry” icon for the found word.

I just want to take the test. Do I need to answer the survey questions?

No, you can leave them all blank and just take the test for fun. At some point after the research period is over, the survey questions will be removed.

How is standard deviation calculated?

theor. variance(N; K; S) = K(N-K)(N-S) / ((N-1) S)

The standard deviation is the square root of this variance.

N = number of items in the range

K = number of known words (the true number, not the estimate from the trial)

S = number of samples (15 in this trial)

It’s an equation I derived myself from the combinatorics, but it’s possible that this equation, or something similar, has been derived before by someone else. Note that in this situation, the actual value of K is unknown to us, and using the value obtained by extrapolating the samples is a rough approximation. This probably violates a number of statistical laws. In other words, this is a rough estimate!
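To make the formula concrete, here is a small Python sketch. It plugs in the extrapolated K as a stand-in for the true value, with the same caveat as above. The function name and the example numbers are hypothetical. For what it's worth, the formula agrees with the variance of a hypergeometric count (sampling without replacement) scaled up by N/S.

```python
import math


def estimate_std_dev(n: int, k: float, s: int) -> float:
    """Standard deviation of the extrapolated known-word count for one range.

    n: number of items in the range
    k: number of known words in the range (here, the extrapolated estimate)
    s: number of samples drawn from the range
    """
    variance = k * (n - k) * (n - s) / ((n - 1) * s)
    return math.sqrt(variance)


# Hypothetical range of 3,000 words with 15 samples, 10 of them marked known.
n, s, known_in_sample = 3000, 15, 10
k_estimate = n * known_in_sample / s  # extrapolated K for this range
print(round(estimate_std_dev(n, k_estimate, s)))
```

The result is zero when K is 0 or N (no uncertainty left in the extrapolation) and largest when about half the range is known.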

I get a blank page, or an error

Please use the Contact form to report any problems. This is a new application that hasn’t been heavily tested against a large number of browsers. To maximize performance, it relies heavily on JavaScript to run the test, avoiding any network communication until the trial is finished. Any issues are most likely caused by an error in the JavaScript or a bug in the web page coding.

Why would I put my email address in the survey?

This field is entirely optional. There are two uses for that field. One is to send out a one-time announcement at the conclusion of the project. The other is as a way to follow up, so that if I get data that throws my whole hypothesis into question, I have some chance at finding out why. If you’re at all worried about giving it out, definitely leave it blank.

6 Comments to 'An application to estimate known Chinese words'

March 28, 2011

This is great – the detailed information at the end of the test really is useful, as is the option to download a list of the words used. Can I ask what specifically the research is on? Or are you keeping it quiet until the project is complete?

March 28, 2011

Thanks for your comment! I don’t want to give out too much information yet to guard against biasing the results. But the investigation is generally looking at knowledge of the frequent words versus the very rare words and what it says about the person’s knowledge and word acquisition. Sorry this isn’t more detailed, but stay tuned!

June 4, 2011

Hi there, just to let you know that I did your test, and found it interesting enough that I blogged about it …

http://mandarinsegments.blogspot.com/2011/06/so-how-many-words-do-i-know.html

June 4, 2011

A problem here seems to be that even a tiny deviation in the high rank bands will increase the estimated word count by a huge number. I know a few (very few) rare words that you would not really expect a beginner to know. When I took the test, just one of these that appeared by chance bumped the estimate by 400, taking it way over what I consider realistic for a beginner like me.

There might not be a good way to tackle this problem though (other than increasing the size of the test sample significantly, which is not practical).

Do you know of any other good free corpora? I’m interested in both tagged and untagged ones. I have played with the LCMC before, but it was the only one I found.

June 5, 2011

@Greg – Thanks for the post, and I wrote a reply on your site. To summarize here, the estimates tend to be high because of rare words that can be easily figured out.

@Szabolcs – There is definitely inaccuracy due to the number of samples. I had to strike a balance between too few words with high error, versus many words and either scaring people off or giving mental fatigue to the brave souls who tried it. I include a column with the reported results for estimated standard deviation in each word group, and the errors can be quite large for a particular slice. But when the individual slices are combined, the errors are mitigated; the worst theoretical error you can get for the test is a standard deviation of +/-1400 words.

I definitely *don’t* know a good free corpus outside of the LCMC, although I would certainly like to know. There are private corpora compiled by research groups for their own purpose, but they are reluctant to distribute them due to copyright concerns. There may be a web search interface to a particular corpus, but the texts themselves are inaccessible. See this previous post which links to a few corpus sites, although I had no luck finding an accessible corpus there.

June 5, 2011

@Chad, one suggestion: add an undo button. I think it’s too easy to click the wrong button (known/unknown) when going through the words quickly, especially when using the keyboard. It would be nice to be able to go back and correct it.