I have had my online vocabulary extraction tool available on the web for a while now. I have gotten a lot of use out of it myself, as my primary interest has been to develop more vocabulary to increase reading ability. The application generally works ok, but it suffers from some technical issues. Because it loads the entire CC-CEDICT every time it runs, it taxes the shared hosting provider a lot, to the point where the script crashes unpredictably, especially for larger texts. It also requires manual intervention to keep the dictionary up to date, and adding more dictionaries takes a lot of additional effort.

Meanwhile, for the past year I’ve been working on a similar program that can be used offline. It has been working well, is a little faster, and makes it easier to drop in newer versions of the CC-CEDICT dictionary. I have spent a few months adding a little more polish to it, and am now releasing it as open source software. At this point, a build is available for Windows systems. The source code is also available, which should allow it to be used on nearly any system. More details are at the project page and the documentation page. Here are some screenshots to demonstrate its functionality:

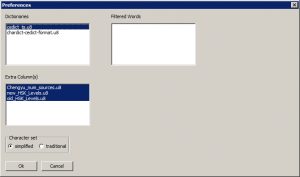

Dictionaries can be changed within the program, for example, to switch from a word list to a character usage list. Filtered word lists remove words from results. “Extra Column” files are additional data about words that can be included in the results. This software can also switch between simplified and traditional characters, a feature the web application is missing.

Additional dictionaries, filters, and word data can be dropped into the appropriate directory.

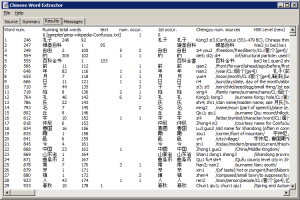

Texts can be opened from a file dialog, or can be typed or pasted into an editor window. After analyzing the text, the results will be displayed as a tab-separated table, suitable for copying and pasting into spreadsheet programs.

Enjoy!

Brilliant! I’ve been hoping you’d do something like this for a while, as I want to compile vocab lists out of some very large texts. Any chance of a Linux build?

In theory it should work, as the source code is available and only requires the wxPython libraries and chardet from the python-chardet package. However, having just tried it on a new Ubuntu install, wxPython isn’t available as a package from the official sources, and it’s being oddly difficult to build from the source RPM at the moment.

The page at http://wiki.wxpython.org/InstallingOnUbuntuOrDebian claims they have their own alternate repository, so that sounds more promising.

Ok, the installation from wxpython.org works great. Here are the commands from a clean install of Ubuntu 11.04:

lsb_release -a

# No LSB modules are available.

# Distributor ID: Ubuntu

# Description: Ubuntu 11.04

# Release: 11.04

# Codename: natty

wget http://apt.wxwidgets.org/key.asc -O - | sudo apt-key add -

# if it waits without going back to the prompt, it’s waiting for the sudo password

sudo nano /etc/apt/sources.list

# replace “natty” with appropriate codename if different Ubuntu version

# # wxWidgets/wxPython repository at apt.wxwidgets.org

# deb http://apt.wxwidgets.org/ natty-wx main

# deb-src http://apt.wxwidgets.org/ natty-wx main

sudo apt-get update

sudo apt-get install python-wxgtk2.8 python-wxtools wx2.8-i18n

sudo apt-get install subversion

sudo apt-get install python-chardet

mkdir ~/CWE

cd ~/CWE

svn export http://svn.zhtoolkit.com/ChineseWordExtractor/trunk/

cd trunk

python main.py &

Working! Great stuff, thanks a lot

Thanks for putting all the effort and work into creating this wonderful piece of software. The attention to detail and care you put into it is amazing. Obviously it’s from someone who is “eating his own dog food”, using it to learn Chinese himself.

I also very much appreciate your very detailed writings and thoughts concerning segmentation, word frequencies and other things related to Chinese text processing. Pity it isn’t discussed more deeply on chinese-forums.com.

I’d also like to report that it runs flawlessly on both the 32-bit and 64-bit versions of Ubuntu.

Regarding lists of word frequencies, have you seen the SUBTLEX project?

http://www.plosone.org/article/info:doi/10.1371/journal.pone.0010729

They make all their data freely available. Have a look at their word and character frequencies. I have been comparing them with the Lancaster LCMC and found it really very interesting.

Another project I would like to call your attention to, since you used Python this time, is CJKlib, http://cjklib.org/. It provides lots of interesting things you can do with decomposition by character and components. They use CC-CEDICT.

By the way, I was curious to know why you decided to use Python this time. Having seen the online version of Zhtoolkit, I thought you were a Perl guy. What were your thoughts regarding the choice of segmentation? Had you previously used the simple Perl segmenter by Serge Sharoff, http://corpus.leeds.ac.uk/tools/zh/, in the Perl version?

And finally, some suggestions, if you can find the time:

1. Command line interface and automation

One of the interesting things I would like to do with my texts and the resulting CSV file is to use all the other text processing tools I know and integrate them into scripts (sed, awk, etc.).

My goal is to have my original text file processed with the help of the word list file. In the end I want to have just a single file, “my own text”, annotated with all the words I want to be there. This will make my reading “undisturbed”. I don’t need to be constantly switching between the text and the word list. I just want one file.

I have tried to go through the python code, but I’m afraid I’m no programmer.

Any hints on how to use your program from the command line?

2. The second idea would be to have an extra column in the CSV file indicating which dictionary each word’s definition comes from. This way one could do a sort of “conditional” processing.

For example, if the definitions of words a, b and c come from the CC-CEDICT dictionary, use them and insert them right in the middle of the text.

If the definitions of words d, e or f come from another dictionary, place them at the start or end of the text, or in a pull quote (HTML), so that they don’t disrupt my reading flow. This would be the case, for example, for words I have on one of “my own difficult-keep-forgetting-words” word lists. I don’t want to see the CC-CEDICT definition again; I want to see my own definition with my own examples from the “dictionary” that I created before.

@Cstudent, thanks for your comments. I wasn’t able to respond immediately, but I’ll answer what I can now.

I had read the paper, but I didn’t peek at the resulting word list, assuming that a corpus based on scripts would lead to some odd word frequencies. But actually, it could be useful for some purposes, so I’ll take another look.

Python is much easier to make an executable out of than Perl. I was impressed by great projects like Anki and Calibre.

Our versions are both based on the same source, Erik Peterson’s scripts at http://www.mandarintools.com. You can still see the influence in my online segmenter which uses his data for the Chinese name detection. I hadn’t noticed Serge Sharoff’s version which added some heuristic improvements, but I’m very interested in looking through it. Thanks!

I had planned for command line use, but hadn’t gotten around to implementing any options. Your request will increase the priority.
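
In the meantime, the tab-separated results can be post-processed with a short script to get a single annotated file. The following is only a rough sketch in Python, not part of the program: the function names `load_glosses` and `annotate` are made up for illustration, and it assumes the word is in the first column and the definition in the second, which may not match the actual column order of the results.

```python
def load_glosses(tsv_text, word_col=0, gloss_col=1):
    """Map each word to a gloss from tab-separated results.

    The column positions are assumptions; adjust them to the real layout.
    """
    glosses = {}
    for line in tsv_text.splitlines():
        cells = line.split("\t")
        if len(cells) > max(word_col, gloss_col) and cells[word_col]:
            # Keep the first gloss seen for a word.
            glosses.setdefault(cells[word_col], cells[gloss_col])
    return glosses


def annotate(text, glosses):
    """Add a gloss in parentheses after the first occurrence of each word.

    Words are found by greedy longest match against the gloss table,
    which is a simplification of real segmentation.
    """
    max_len = max(map(len, glosses), default=0)
    seen = set()
    out = []
    i = 0
    while i < len(text):
        for size in range(min(max_len, len(text) - i), 0, -1):
            chunk = text[i:i + size]
            if chunk in glosses:
                out.append(chunk)
                if chunk not in seen:
                    out.append("(" + glosses[chunk] + ")")
                    seen.add(chunk)
                i += size
                break
        else:
            # No known word starts here; copy the character unchanged.
            out.append(text[i])
            i += 1
    return "".join(out)


if __name__ == "__main__":
    table = "中文\tChinese language\n学习\tto study\n"
    print(annotate("我学习中文", load_glosses(table)))
```

Only the first occurrence of each word is glossed, so repeated words do not clutter the text. Saving the results to a file and reading it with `open(path, encoding="utf-8").read()` would be the obvious way to feed real data in.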

If you mean like the column on the online segmenter, this may be easy to add. I’ll see what it will take.

Also based on that point, I can foresee the need for an option for whether multiple dictionaries should merge their definitions or replace them, with one having higher priority. Right now I think the first listed dictionary takes precedence.
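
To make the merge-or-replace idea concrete, here is a minimal sketch of how such an option might behave. It is not taken from the program’s source: `lookup`, the `merge` flag and the dictionary names are all hypothetical, and dictionaries are assumed to be plain word-to-definition mappings listed in priority order. It also returns the names of the dictionaries that supplied the definition, which is the kind of information a source column would need.

```python
def lookup(word, dictionaries, merge=False):
    """Return (definition, sources) for a word.

    dictionaries: list of (name, mapping) pairs, highest priority first.
    merge=False: the first dictionary containing the word wins.
    merge=True: definitions from every matching dictionary are joined.
    """
    hits = [(name, entries[word])
            for name, entries in dictionaries
            if word in entries]
    if not hits:
        return None, []
    if not merge:
        hits = hits[:1]
    definition = "; ".join(text for _, text in hits)
    sources = [name for name, _ in hits]
    return definition, sources


if __name__ == "__main__":
    dictionaries = [
        ("my-words", {"学习": "to study (my note)"}),
        ("CC-CEDICT", {"学习": "to learn; to study", "中文": "Chinese language"}),
    ]
    for word in ("学习", "中文"):
        print(word, lookup(word, dictionaries), sep="\t")
```

With `merge=False` a personal word list placed first overrides CC-CEDICT for the words it contains, while everything else falls through to the next dictionary.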

Thanks for all your comments!