In my Chinese studies, the Lancaster Corpus of Mandarin Chinese (LCMC) has been a useful source of data—word and character frequencies, collocations, phrase usage, parts of speech, etc. The corpus is freely available for non-commercial and research use. However, the native form of its data is in a set of XML files, which is not an easy format to work with. In addition, the XML data is slow to read data from, because all those XML tags and the entire data structure needs to be parsed. A much better format for the data is an SQL database. Stored in a database, many kinds queries and reports can be executed very efficiently. Depending on the software, these queries and reports can return results very quickly, much faster than in the XML format.

I have made available a Perl script and some other related tools to assist with extracting the LCMC files into a SQLite database. SQLite is a lightweight relational database management system intended for portability and ease of use. Because it functions as a standalone program (not client-server), it is easy to install and use. It’s more ubiquitous than you might think. It’s how the Firefox and Chrome browsers stores its history, cookies, and preferences. But it’s also used, for example, by the Anki program as the storage format for flashcard data, and by the Calibre e-reader program to store information on installed e-books.

Obtaining the Corpus

The home page for the LCMC is at http://www.lancs.ac.uk/fass/projects/corpus/LCMC/. Of the two links on the page for the distribution locations, the Oxford Text Archive link is the only one that allows for direct downloading. Follow the link to the Oxford Text Archive, browse the archives, and search for Lancaster Corpus of Mandarin Chinese in the “Corpora” tab. The LCMC is ID 2474. The download link is directly on the detail page. After reading the Terms and Conditions, enter your email to receive the link to the actual download location. Clicking on the link in the email message will immediately download the file, 2474.zip (6.5MB).

Creating the SQL Database

The scripts can be downloaded as a zip file from here, or as individual files in the archive. You will need Perl installed on your machine, along with a few necessary modules that may need to be downloaded separately. Full information on the required steps can be found in the README file.

If the data conversion was successful, you will now have a 297 MB file called lcmc.db3 in the current directory.

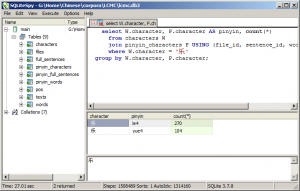

As for client access to the newly created SQLite database, you will need software that can handle Unicode, so that the Chinese characters can be displayed. For Windows, SQLiteSpy is my personal choice. Queries are fast, the interface is intuitive, and it has Unicode and support for regular expressions built in. For Linux, both Sqliteman and SQLite Manager (a Firefox plug-in) are adequate. One common software package for Linux, SQLite Database Browser is not suitable, because the LCMC database is large, and the program works by trying to load it all into memory at once.

Some Sample Queries

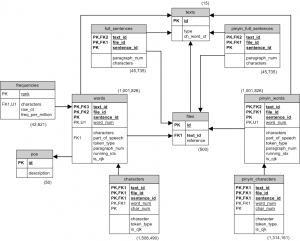

In a relational database, information is organized into separate tables of data and linked by unique keys for each record. This arrangement makes it efficient to perform many kinds of queries. Below are just a few examples of the kind of reports that be done.

Raw frequency counts of all Chinese words in the corpus

SELECT characters, COUNT('x')

FROM words

WHERE token_type = 'w'

AND is_cjk = 'Y'

GROUP BY characters ORDER BY count('x') DESC, characters ASC;

| characters | COUNT(‘x’) |

|---|---|

| 的 | 51141 |

| 了 | 12787 |

| 是 | 11601 |

| 在 | 10420 |

| 一 | 8380 |

| 和 | 7297 |

| 他 | 5898 |

| 不 | 5687 |

| 我 | 5576 |

| … | … |

List all words tagged as prepositions

SELECT W.characters, P.id, P.description, COUNT(*) from words W

JOIN pos P on W.part_of_speech = P.id

WHERE P.description = 'preposition'

GROUP BY W.characters, P.id, P.description

ORDER BY COUNT(*) DESC;

| characters | id | P.description | COUNT(*) |

|---|---|---|---|

| 在 | p | preposition | 9899 |

| 对 | p | preposition | 3155 |

| 把 | p | preposition | 1989 |

| 从 | p | preposition | 1782 |

| 为 | p | preposition | 1702 |

| 与 | p | preposition | 1432 |

| 以 | p | preposition | 1355 |

| 被 | p | preposition | 1245 |

| … | … | … | … |

List the average sentence length per text category

SELECT T.id, T.type, ROUND(AVG(LENGTH(F.characters)), 1) as avg_sentence_length

FROM full_sentences F

JOIN texts T on F.text_id = T.id

GROUP BY T.id, T.type;

| id | type | avg_sentence_length |

|---|---|---|

| A | Press reportage | 34.0 |

| B | Press editorial | 36.6 |

| C | Press review | 42.5 |

| D | Religion | 41.2 |

| E | Skills, trades and hobbies | 32.5 |

| F | Popular lore | 33.0 |

| G | Biographies and essays | 31.0 |

| H | Miscellaneous (reports, official documents) | 42.3 |

| J | Science (academic prose) | 40.3 |

| K | General fiction | 24.3 |

| L | Mystery and detective fiction | 28.4 |

| M | Science fiction | 33.4 |

| N | Martial art fiction | 28.6 |

| P | Romantic fiction | 25.8 |

| R | Humour | 20.8 |

List usage of words containing the character 给

SELECT W.characters, P.id, P.description, count(*) from words W

JOIN pos P on W.part_of_speech = P.id

WHERE W.characters LIKE '%给%'

GROUP BY W.characters, P.id, P.description

ORDER BY COUNT(*) DESC;

| characters | id | description | count(*) |

|---|---|---|---|

| 给 | p | preposition | 985 |

| 给 | v | verb | 149 |

| 给予 | v | verb | 92 |

| 交给 | v | verb | 54 |

| 送给 | v | verb | 37 |

| 递给 | v | verb | 19 |

| 供给 | vn | verb with nominal function | 16 |

| 发给 | v | verb | 15 |

| … | … | … |

All words and corresponding pinyin

select W.file_id, W.sentence_id, W.word_num, W.characters, P.characters AS pinyin from words W

left join pinyin_words P USING (file_id, sentence_id, word_num);

| file_id | sentence_id | word_num | characters | pinyin |

|---|---|---|---|---|

| A01 | 0001 | 1 | 大 | da4 |

| A01 | 0001 | 2 | 墙 | qiang2 |

| A01 | 0001 | 3 | 内外 | nei4wai4 |

| A01 | 0001 | 4 | -- | -- |

| A01 | 0001 | 5 | 北京市 | bei3jing1shi4 |

| A01 | 0001 | 6 | 监狱 | jian1yu4 |

| A01 | 0001 | 7 | 纪实 | ji4shi2 |

| A01 | 0001 | 8 | ( | ( |

| A01 | 0001 | 9 | 三 | san1 |

| A01 | 0001 | 10 | ) | ) |

Pinyin usage of character 乐

select W.character, P.character AS pinyin, count(*)

from characters W

join pinyin_characters P USING (file_id, sentence_id, word_num, char_num)

where W.character = '乐'

group by W.character, P.character;

| character | pinyin | count(*) |

|---|---|---|

| 乐 | le4 | 270 |

| 乐 | yue4 | 104 |

These are just a few examples of what you can do with the corpus data in the SQL database. Have fun!

http://www.zhtoolkit.com/apps/small_utilities/lcmc_xml_to_sqlite.zip (69Kb)